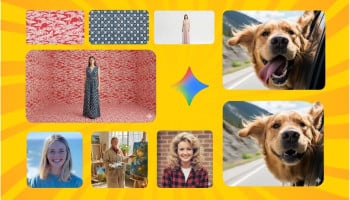

All over Pakistan and around the globe, people are uploading their selfies to AI apps and seeing themselves reflected back in new forms. One click, and you’re standing next to a favourite celebrity, sharing the space with a political figure, or even having your picture taken with someone who isn’t alive anymore.

Some take it a step further, using AI to put their current self next to their childhood face, creating moments that feel touching, even nostalgic. It’s amusing, it’s trending, and it feels harmless and exciting, but, as always, behind the magic are risks that most users never think about.

The question that silently lingers behind these viral trends is a simple one: is it really safe to upload our images to AI platforms? For most users, the enjoyment of seeing themselves in fantasy portraits eclipses any worry about what happens once the photo is handed over to an app.

When Gadinsider asked Google Gemini whether it is safe to upload personal photos, the response showed just how complicated the question is. According to Google Gemini’s privacy policies, images and conversations are stored by default, may be reviewed anonymously to improve the AI system, and may be reused to build better products in the future.

Although users have some control over how long their data is stored, and can turn off the saving option entirely, the hidden metadata embedded in photos, such as location and device details, can still add risk because it remains unchanged.

When metadata turns into a threat

Explaining this risk further, Taleemabad Head of Engineering, Mashood Rastgar, told Gadinsider: “Most popular tools remove EXIF (Exchangeable Image File Format) data, which contains information like the device used, GPS coordinates, and other details. If a service does not remove this metadata, it poses significant privacy concerns. Geo-tagging, for instance, reveals the exact location where an image was taken. If this information becomes public, it can be very dangerous.”

Researchers have raised concerns about these risks for years. A survey article titled Privacy and Security Concerns in Generative AI, published in 2024 on ResearchGate, noted that image models such as diffusion systems may leak private data through a process called “memorisation”, meaning that parts of the training images, even revealing or identifiable ones, can sometimes be reproduced by the model itself. Uploading your images without safeguards is therefore potentially unsafe.

Some users have noticed these risks on their own. Abid, a student speaking with Gadinsider, described his experience: “I thought to give Google Gemini a try after being this viral, and uploaded a photo of half of my face. I was shocked that it then generated my entire face and included a smile that was exactly like mine, a dimple appeared on the left cheek, just the way it appears on my face in real. It suddenly struck me that the AI seemed to have knowledge of things about me that I never actually revealed in the photo. That’s when I thought how does this tool know details about me that I never revealed?”

Long-term risks few consider

This is not a niche concern. From AI tools such as NanoBanana and LensaAI to the built-in AI features that social media platforms make available, people around the globe are sharing millions of pictures every day.

A national survey conducted by Elon University in March 2025 found that approximately 52% of adults in the US now use AI tools, such as ChatGPT, in their daily lives.

However, most users are unlikely to read the privacy policy before uploading anything. If they stopped to think about what happens to their data afterwards, how it may be stored, reused or fed back into training models, they might understand that a simple upload can become a long-term entry in databases over which they have little or no control.

When asked about the long-term risks of this practice, AI and Technology Trainer Jareeullah Shah told Gadinsider: “It's more of a privacy risk than a security risk in my opinion. So let’s imagine this, you upload your images on platforms like nano banana in 2025, and in two years, the next version of nano banana is trained on the data that you have provided.”

“Someone somewhere in 2027 uses nano to generate an ad that you don’t agree with… and the image generator creates an avatar in this image that has an uncanny resemblance to you because of the data you have uploaded two years prior. I don’t think most people will be very comfortable with that, but that is a real risk,” Shah added.

These risks are not merely theoretical. A 2023 study attributed to Cornell University and Google DeepMind described how diffusion-based image generators can recreate authentic training images, raising a significant risk that private information and copyrighted images could emerge in generative outputs.

Similarly, Wired reported in 2025 that a database used by the AI image generator GenNomis was exposed online, disclosing thousands of images, including sensitive personal photos and other explicit content.

Consent and responsibility in the age of AI

From a legal standpoint, protections in Pakistan remain minimal. Digital Rights Foundation's Executive Director Nighat Dad told Gadinsider: “Pakistan's privacy framework is still evolving, with the ministry struggling to pass the Data Protection Bill. However, constitutional protections such as Article 14, alongside existing laws like the Prevention of Electronic Crimes Act (PECA), provide some protection. PECA penalises unauthorised use of personal data, including images, without consent.”

Public trust also reflects these anxieties. Data from the International Association of Privacy Professionals (IAPP) showed that 81% of consumers are worried about AI using their data in ways they would not be comfortable with, and a large proportion said they would not use AI at all because of their privacy concerns.

A separate report indicated that 68% of organisations admitted their employees had uploaded sensitive data into AI tools at least once, often without security clearance.

Addressing the need for regulation, Dad told Gadinsider: “In Pakistan, stronger human rights-centric regulations regarding data protection are an imperative. The state lacks legal safeguards against AI-specific violations of privacy and security. In the absence of comprehensive Data Protection Laws, there’s no regulatory framework against which to moderate AI systems.”

The question of consent also looms large. Shah stressed: “First of all, consent is paramount; no one should upload any image of a person (public figure or not) without first taking their full consent and informing them the exact reason you are uploading it. Yes, I believe there should be guidelines around this, but the challenge is to enforce the said guidelines.”

While the risks of AI image tools are real, experts say users can take steps to protect themselves. Dad advised: “Firstly, people should refrain from sharing any sensitive personal information, including CNIC numbers, addresses, medical information, etc., with AI tools, even if they seem to have secure encryption. Always alter privacy settings and implement tools that outline how they store and use your data.

“Secondly, always verify the information before getting too comfortable trusting or passing on what an AI tool generates; it spreads misinformation quickly if people assume machines can't be wrong.

“Lastly, you should not depend on the chatbots or ChatGPT as replacement therapies or sources of mental health relief. Chatbots are not regulated and do not pick up on the fine nuances that a human connection will. There has been an overall rise in reports of chatbots and AI tools causing personal harm.”

Rastgar echoed this, stressing the importance of technical safeguards. He told Gadinsider: “Consider the potential consequences: before uploading, think about what could happen to your image and data today or in the future. Prefer paid accounts (where applicable) since free accounts often allow data to be used for training. And always strip metadata from your photos before uploading them.”
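
For readers who want to see what stripping metadata involves in practice, the following is a minimal Python sketch, not a method described by Rastgar or any tool named in this article. It assumes a standard JPEG file, uses only Python's standard library, and removes the Exif block (where GPS coordinates and device details are usually stored) while leaving the picture itself untouched. The function name `strip_exif` is hypothetical, and other kinds of embedded metadata, such as XMP, are not handled here.

```python
import struct


def strip_exif(jpeg_bytes: bytes) -> bytes:
    """Return a copy of a JPEG with its Exif (APP1) segments removed.

    Illustrative sketch only: assumes a well-formed JPEG and ignores
    other metadata containers such as XMP or IPTC.
    """
    if jpeg_bytes[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG: missing start-of-image marker")

    out = bytearray(b"\xff\xd8")
    pos = 2
    while pos + 4 <= len(jpeg_bytes):
        if jpeg_bytes[pos] != 0xFF:
            raise ValueError("malformed JPEG: expected a segment marker")
        marker = jpeg_bytes[pos + 1]

        # Start of scan: everything after this is compressed image data,
        # so copy the remainder of the file unchanged and stop.
        if marker == 0xDA:
            out += jpeg_bytes[pos:]
            return bytes(out)

        # Each header segment stores a 2-byte big-endian length that
        # counts the length field itself but not the 2-byte marker.
        (length,) = struct.unpack(">H", jpeg_bytes[pos + 2:pos + 4])
        segment = jpeg_bytes[pos:pos + 2 + length]

        # Exif data lives in APP1 segments whose payload starts with "Exif\0\0".
        is_exif = marker == 0xE1 and segment[4:10] == b"Exif\x00\x00"
        if not is_exif:
            out += segment
        pos += 2 + length

    # No scan segment was found; keep whatever bytes remain.
    out += jpeg_bytes[pos:]
    return bytes(out)
```

To use it, one could read a photo's bytes from disk, pass them through `strip_exif`, and write the result to a new file before uploading. Many photo apps and operating systems offer an equivalent "remove location" option at share time, which is the simpler route for most users.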

Experts agree that while AI-based images may seem fun, every upload has its own cost in terms of privacy and security. Until stronger regulations are in place, people should treat their photos and information with care and think twice before exchanging privacy for some fun.