The internet has been buzzing about Meta Connect 2024, held yesterday in Menlo Park, California.

Alongside a slew of new products and services, Meta used Meta Connect 2024 to launch Llama 3.2, its first large language model (LLM) able to understand both images and text.

Meta Llama 3.2 released at Meta Connect 2024

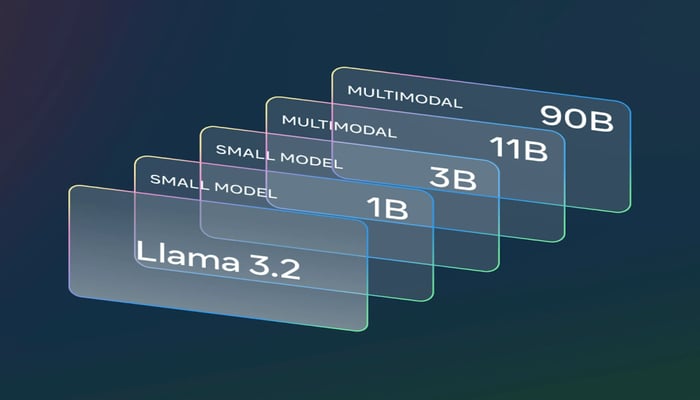

The new Llama release comprises small and medium-sized vision models (11B and 90B parameters) alongside lightweight text-only models (1B and 3B parameters) designed to run on select mobile and edge devices.

“This is our first open-source multimodal model. It’s going to enable a lot of applications that will require visual understanding,” said Meta CEO Mark Zuckerberg during the keynote.

Its 128,000-token context length matches that of its predecessor, allowing users to input large amounts of text in a single prompt. According to Meta, the larger Llama 3.2 models respond more accurately to complex tasks.

To help developers work with its LLMs across a diverse range of environments, the Facebook maker also released official Llama Stack distributions for the first time, covering on-prem, on-device, cloud and single-node deployments.

“Open source is going to be — already is — the most cost-effective customizable, trustworthy and performant option out there. We’ve reached an inflexion point in the industry. It’s starting to become an industry standard, call it the Linux of AI,” added Zuckerberg.

Meta Llama 3.1, the preceding model, was rolled out just over two months ago, and Meta says it has seen 10x growth since then.